Is it OK to take organic human artwork without consent and build a machine to create visual artifacts in the same style and expression? Is it OK to make money from it? Can we restrict access to it, or does it belong to humanity? Do artworks created by an AI have any value? Can only moral, flesh-and-blood humans create art? Can AI be compared to a paintbrush or Photoshop, a tool used by the artist to create?

Ideogram AI cover image prompted by jbulian

Update, May 12, 2025: the United States Copyright Office on Copyright and Artificial Intelligence: "Various uses of copyrighted works in AI training are likely to be transformative. The extent to which they are fair, however, will depend on what works were used, from what source, for what purpose, and with what controls on the outputs—all of which can affect the market.

When a model is deployed for purposes such as analysis or research—the types of uses that are critical to international competitiveness—the outputs are unlikely to substitute for expressive works used in training.

But making commercial use of vast troves of copyrighted works to produce expressive content that competes with them in existing markets, especially where this is accomplished through illegal access, goes beyond established fair use boundaries."

Today it seems we only have questions, no answers.

The ethics debate quickly becomes emotional. Doctors, copywriters, developers, musicians, designers, influencers, brokers, teachers, animators, lawyers, accountants - it touches every aspect of society.

Ethics or moral philosophy is a branch of philosophy that "involves systematizing, defending, and recommending concepts of right and wrong behavior". (Wikipedia)

Diffusion models (images, audio, video) and large language models (text) change everything. Legal frameworks and moral judgements will always lag behind: they cannot anticipate, they can only react.

Marc Andreessen, co-founder of Netscape and co-creator of Mosaic, one of the first widely used web browsers, sees AI-powered art as a glorious golden age.

Others are not so sure. Yuval Harari, Tristan Harris and Aza Raskin write:

What would it mean for humans to live in a world where a large percentage of stories, melodies, images, laws, policies and tools are shaped by non-human intelligence, which knows how to exploit with superhuman efficiency the weaknesses, biases and addictions of the human mind — while also knowing how to form intimate relationships with human beings? In games like chess, no human can hope to beat a computer. What happens when the same thing occurs in art, politics, and even religion?

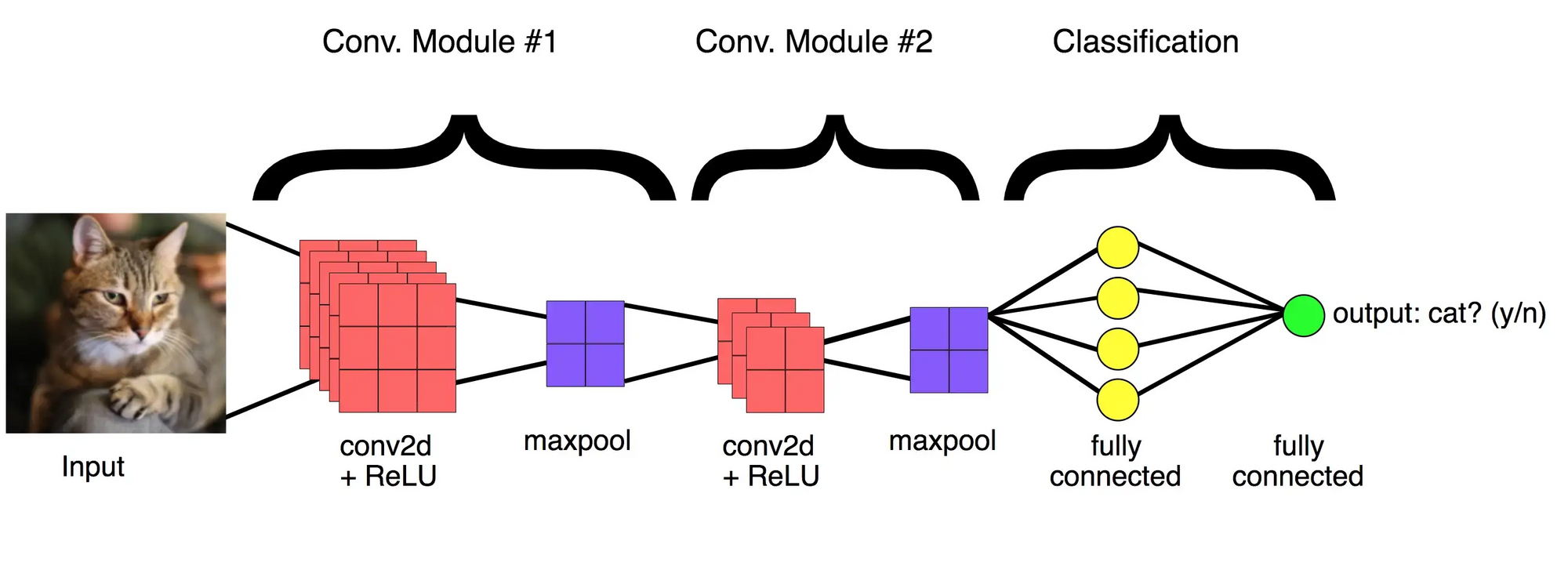

Neural networks mimic the function of our brain

We feed the neural network some kind of input, and the network makes a guess. With some way of telling it how well it did, the network will adapt and try to make a better guess next time. After a while, the network will make very good predictions. All neural networks need some kind of input.
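The guess, feedback, adapt loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how Midjourney or any real system is built: the "network" is a single neuron, the example data (a hidden rule of y = 2x + 1) is invented, and the names `examples`, `train` and `learning_rate` are hypothetical.

```python
# A toy "network" with a single neuron: guess = weight * x + bias.
# Hypothetical training data; the hidden rule is y = 2x + 1.
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]


def train(examples, steps=2000, learning_rate=0.05):
    weight, bias = 0.0, 0.0  # the network starts out knowing nothing
    for _ in range(steps):
        for x, target in examples:
            guess = weight * x + bias            # the network makes a guess
            error = guess - target               # feedback: how far off was it?
            weight -= learning_rate * error * x  # adapt to guess better next time
            bias -= learning_rate * error
    return weight, bias


weight, bias = train(examples)
prediction = weight * 4.0 + bias  # an input the network has never seen
print(round(prediction, 2))       # lands very close to 9
```

Nothing in the loop "understands" the rule. The numbers are nudged until the guesses stop being wrong, which is the same principle at work, at a vastly larger scale, in image and language models.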

Midjourney was trained on text and images from datasets by the German non-profit LAION (laion.ai), plus additional internet scraping. The network learned to generate images from text prompts. For large language models, or LLMs (ChatGPT, Claude, Gemini and others), basically all the content publicly available on the internet was scraped and used as training data.

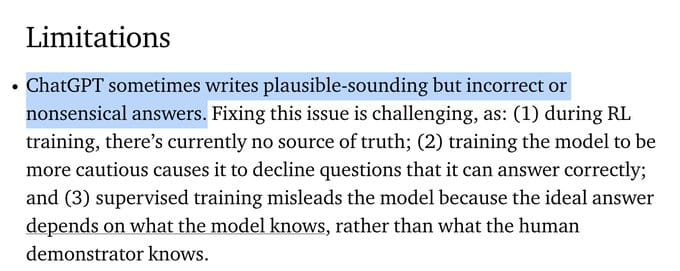

LLMs do not "know" anything. They are just crunching numbers and calculating probabilities. Here is ChatGPT's disclaimer regarding its limitations.
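To make "calculating probabilities" concrete, here is a minimal Python sketch of the last step of text generation: turning raw scores for candidate next words into probabilities and picking the most likely one. The scores and the three candidate words are invented for illustration. A real model computes such scores over tens of thousands of tokens and usually samples from them rather than always taking the top one.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtracted for numerical stability
    exps = {token: math.exp(s - highest) for token, s in scores.items()}
    total = sum(exps.values())
    return {token: e / total for token, e in exps.items()}


# Hypothetical raw scores for the word following "The crocodile opened its".
# These numbers are made up; they are not taken from any real model.
scores = {"mouth": 4.0, "eyes": 2.5, "wallet": -1.0}

probabilities = softmax(scores)
next_word = max(probabilities, key=probabilities.get)
print(next_word)  # "mouth", the highest-scoring candidate
```

The model never checks whether crocodiles have mouths. "mouth" wins only because it received the highest score.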

OpenAI's ChatGPT was prompted into revealing some of its raw training data by a team of researchers, primarily from Google DeepMind. 404 Media wrote about this:

..yet another reminder that the world’s most important and most valuable AI company has been built on the backs of the collective work of humanity, often without permission, and without compensation to those who created it.

Understandably, creators are upset

Midjourney AI has learned the artist's individual style, the unique feeling and expression. Here is a comment from Twitter:

For the sake of artists who have had their art ripped from them to be fed into these programs without consent, don't use this. For the sake of artists who are being accused of theft for THEIR OWN WORK that someone stole to finish in AI, don't use this

Copywriters...

... have been through this already: enter a text prompt and get well-written copy. Here's "How to feed a crocodile" by Neuro-flash AI:

In addition, feeding crocodiles can also help to deter them from attacking humans or livestock. If they know that there is an easy food source available, they are less likely to see us as potential prey. This can help to reduce the number of attacks that occur each year.

The writing style and tone of voice adopted by the AI had to come from somewhere. Every such model has to be trained on existing data.

Software developers

... are surprised to find their work showing up in code generated by GitHub Copilot, a programming AI by Microsoft. The original work, distributed under a GPL open-source license, requires anyone using it to credit the author, something Copilot is not doing or is possibly unable to do.

So, who owns the work created by an AI?

The generated AI image is a mixture of many images & styles - and the minds of the software architects, programmers and trainers who made the AI system possible. "Fair use" is often cited as a legal reason for using images and text available on the internet as training data for large language models.

In January 2023 a class-action lawsuit was filed against Stability AI, DeviantArt, and Midjourney by lawyer Matthew Butterick and co-counsel on behalf of artists, over the companies' use of Stable Diffusion in "remixing the copyrighted works of millions of artists whose work was used as training data."

The New York Times sued OpenAI and Microsoft in December 2023 over ChatGPT and Bing, requesting a jury trial. Read a breakdown of the complaint by AI & IP lawyer Cecilia Ziniti on Twitter.

Publicly, Defendants insist that their conduct is protected as “fair use” because their unlicensed use of copyrighted content to train GenAI models serves a new “transformative” purpose. But there is nothing “transformative” about using The Times’s content without payment to create products that substitute for The Times and steal audiences away from it. Because the outputs of Defendants’ GenAI models compete with and closely mimic the inputs used to train them, copying Times works for that purpose is not fair use.

OpenAI has a different viewpoint

"Because copyright today covers virtually every sort of human expression... it would be impossible to train today's leading AI models without using copyrighted materials," stated OpenAI in its submission.

The organisation argued that "limiting training data to out-of-copyright works would lead to AI systems that could not meet the needs of contemporary society".

Copyright, Fair use, and Attribution

A Twitter thread by @hankgreen was the inspiration for this blog post. He made his account private for a while ("Taking a break because Twitter seems to be more and more on the wrong side of the battle of self righteous outrage vs nuance and complexity.") but is tweeting again, without touching the AI art controversy.

New legal frameworks on copyright, fair use and attribution are badly needed here.

Possibly we can learn from the introduction of the printing press. Dr. Richard Scott Nokes, professor of medieval literature at Troy University, wrote:

The printing press didn't exactly put monks or scribes out of work. Monks support their calling in a lot of different ways, so it isn't like the printing press put them out of business. [...] Also, you have to remember that the printing press is essentially only useful for mass production -- just think of all the things you handwrite every single day. I think it's more fair to say that the printing press transformed the job of the scribe.

Artists are getting accused of using AI

Images created by Stable Diffusion and Midjourney AI can emulate the style of the original artist so closely that the artist's own work gets mistaken for AI output. This happened to @kochi003s.

Exceptions are not exceptional

Critically, an AI cannot apply common sense. Yejin Choi, AI researcher and 2022 recipient of the MacArthur grant, explains in The New York Times:

Let me give you another example: You and I know birds can fly, and we know penguins generally cannot. So A.I. researchers thought, we can code this up: Birds usually fly, except for penguins. But in fact, exceptions are the challenge for common-sense rules. Newborn baby birds cannot fly, birds covered in oil cannot fly, birds who are injured cannot fly, birds in a cage cannot fly. The point being, exceptions are not exceptional, and you and I can think of them even though nobody told us. It’s a fascinating capability, and it’s not so easy for A.I.

I think it needs a human to be of any value - it knows nothing about the world it exists in and the consequences of its actions. Does it make sense to "feed crocodiles to reduce the number of attacks"? What do you feed them? Is it realistic? Might feeding attract more crocodiles?

An AI is always sure, never hesitant or ambiguous. Arvind Narayanan, a computer science professor at Princeton who writes about the dangers of AI on Substack, tweeted:

“The danger is that you can’t tell when it’s wrong unless you already know the answer”.

AI and humans will be creating together. It's just a machine, anyway. It doesn't know anything about fear, suffering, hate, hunger, ecstasy, loneliness and our existential condition. It can never be real, only imitate life.

Deeper insights into the AI's world can be found in the research paper Talking About Large Language Models.

"The real issue here is that, whatever emergent properties it has, the LLM itself has no access to any external reality against which its words might be measured, nor the means to apply any other external criteria of truth."

What's next?

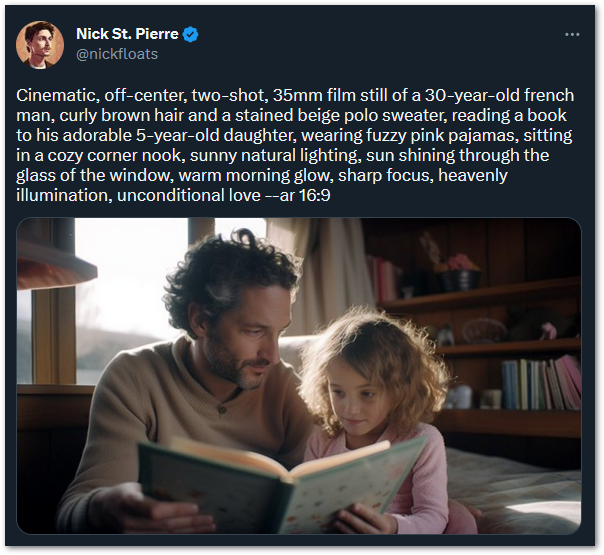

We are only getting started. Here's an image prompted with Midjourney v5 by Nick St. Pierre. These two people never existed, and the room does not exist. This 'photograph' never happened.

Kiri prompted: "a woman wears the Vision Pro headset with a crown, religious symbolism, greek schemamonk, high fashion editorial, glsl shaders, quantum wavetracing --ar 16:9 --stylize 1000 --v 6"

Image below by @OrctonAI: "An emotional scene from a drama that won the Oscar for Best Actress. The scene captures a poignant moment where the lead character, a young woman, is standing on a cliff overlooking the sea at sunset, letting go of a bird from her hands. The sun's warm glow illuminates her face, highlighting her mixed feelings of sadness and hope. The image should capture the young woman's emotional expression, the beautiful sunset, and the sense of freedom as the bird takes flight. --ar 3:2 --v 5.1 --s 1000 --q 2"